Face recognition technology has long been a concept that lived in fictional worlds, whether as a tool to solve a crime or to open doors. Today the technology has matured significantly and is becoming common in everyday life. In our mission to build a truly digital trust system, we at Signzy use facial recognition technology to identify and authenticate individuals. The technology performs this task in three steps: detecting the face, extracting features from it, and finally matching and verifying. As a visual search engine tool, it identifies key factors within the given image of the face.

To pioneer our facial recognition technology, we wanted an edge over current deep learning-based facial recognition models. Our idea was to embed human-crafted knowledge into state-of-the-art CNN architectures to improve their accuracy. For that, we needed an extensive survey of the best facial feature descriptors. In this blog, we share the part of our research that describes some of these features.

Local binary patterns

LBP looks at the points surrounding a central point and tests whether each surrounding point is greater than or less than the central point (i.e., it gives one binary result per neighbour). The resulting bits are usually packed into a single code per pixel. It is one of the simplest feature descriptors.
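The comparison described above can be sketched in a few lines of NumPy. This is a minimal illustration, not our production code: the function name, the 3x3 neighbourhood, the clockwise bit order, and the "greater than or equal" convention are all assumptions made for the example.

```python
import numpy as np

def local_binary_pattern(image):
    """Return the 8-neighbour LBP code for every interior pixel of a 2-D array."""
    image = np.asarray(image)
    height, width = image.shape
    center = image[1:-1, 1:-1]
    # Neighbour offsets (row, col), clockwise from the top-left corner.
    # The starting point and direction are an arbitrary choice for this sketch.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = image[1 + dy:height - 1 + dy, 1 + dx:width - 1 + dx]
        # Binary test: 1 if the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes

patch = np.array([[1, 2, 3],
                  [4, 5, 6],
                  [7, 8, 9]])
print(local_binary_pattern(patch))  # [[120]]
```

In practice a histogram of these codes over a face region, rather than the raw code image, is what usually serves as the descriptor.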

Gabor wavelets

Gabor wavelets are linear filters used for texture analysis: they test whether the image contains specific frequency content in specific directions within a localized region around the point or region of analysis.

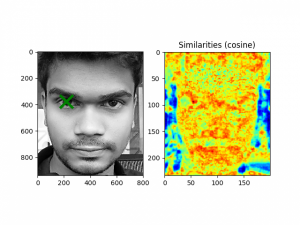
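To make the "specific frequency in a specific direction" idea concrete, here is a small NumPy sketch of a complex Gabor kernel (a Gaussian envelope multiplied by a complex sinusoid). The function names, the isotropic envelope, and the parameter values are assumptions chosen for illustration. The kernel tuned to the stripe direction responds far more strongly than the same kernel rotated by 90 degrees.

```python
import numpy as np

def gabor_kernel(size, wavelength, theta, sigma):
    """Complex Gabor kernel: Gaussian envelope times a complex sinusoid.

    `size` is assumed to be odd; `theta` is the direction (in radians)
    along which the sinusoid oscillates.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    carrier = np.exp(1j * 2.0 * np.pi * x_rot / wavelength)
    return envelope * carrier

def gabor_response(patch, kernel):
    """Complex filter response of one kernel centred on one patch."""
    return np.sum(patch * np.conj(kernel))

size = 21
cols = np.arange(size) - size // 2
# Vertical stripes: intensity varies along x with a wavelength of 8 pixels.
stripes = np.tile(np.cos(2.0 * np.pi * cols / 8.0), (size, 1))

matched = abs(gabor_response(stripes, gabor_kernel(size, 8.0, 0.0, 4.0)))
rotated = abs(gabor_response(stripes, gabor_kernel(size, 8.0, np.pi / 2, 4.0)))
print(matched > rotated)  # True
```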

Gabor jet similarities

A Gabor jet is the collection of the (complex-valued) responses of all Gabor wavelets of the family at a certain point in the image. It is a local texture descriptor that can be used for various applications, one of which is locating a texture in a given image. For example, one might locate the position of the eye by scanning over the whole image: at each position, the similarity between the reference Gabor jet and the Gabor jet at that location is computed using a bob.ip.gabor.Similarity.

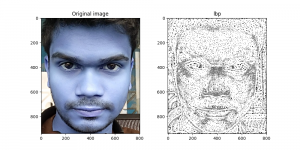
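The jet-and-compare idea can be sketched without any dedicated library. The code below is a NumPy-only illustration and does not reproduce the bob.ip.gabor API: the helper names, the small wavelet family (two wavelengths, four orientations), and the choice of cosine similarity between response magnitudes are all assumptions.

```python
import numpy as np

def gabor_kernel(size, wavelength, theta, sigma):
    """Complex Gabor kernel with an isotropic Gaussian envelope (odd size)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    return envelope * np.exp(1j * 2.0 * np.pi * x_rot / wavelength)

def gabor_jet(image, row, col, size=21, wavelengths=(4.0, 8.0), n_orientations=4):
    """Complex responses of every wavelet in the family at one pixel.

    The pixel must lie at least size // 2 pixels away from the border.
    """
    half = size // 2
    patch = image[row - half:row + half + 1, col - half:col + half + 1]
    jet = []
    for wavelength in wavelengths:
        for k in range(n_orientations):
            theta = k * np.pi / n_orientations
            kernel = gabor_kernel(size, wavelength, theta, sigma=wavelength / 2.0)
            jet.append(np.sum(patch * np.conj(kernel)))
    return np.array(jet)

def jet_similarity(jet_a, jet_b):
    """Cosine similarity between the magnitudes of two jets (one common choice)."""
    a, b = np.abs(jet_a), np.abs(jet_b)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

rng = np.random.default_rng(0)
image = rng.random((64, 64))
reference = gabor_jet(image, 32, 32)
candidate = gabor_jet(image, 20, 40)
print(jet_similarity(reference, reference), jet_similarity(reference, candidate))
```

Scanning `jet_similarity` over every valid position and taking the maximum is the search strategy described above for locating, say, an eye.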

Local phase quantization

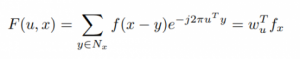

The local phase quantization (LPQ) method is based on the blur invariance property of the Fourier phase spectrum. It uses the local phase information extracted using the 2-D DFT or, more precisely, a short-term Fourier transform (STFT) computed over a rectangular M-by-M neighborhood $N_x$ at each pixel position $x$ of the image $f(x)$, defined by:

$$F(u, x) = \sum_{y \in N_x} f(x - y)\, e^{-j 2\pi u^T y} = w_u^T f_x$$

where $w_u$ is the basis vector of the 2-D discrete Fourier transform (DFT) at frequency $u$, and $f_x$ is another vector containing all $M^2$ image samples from $N_x$.

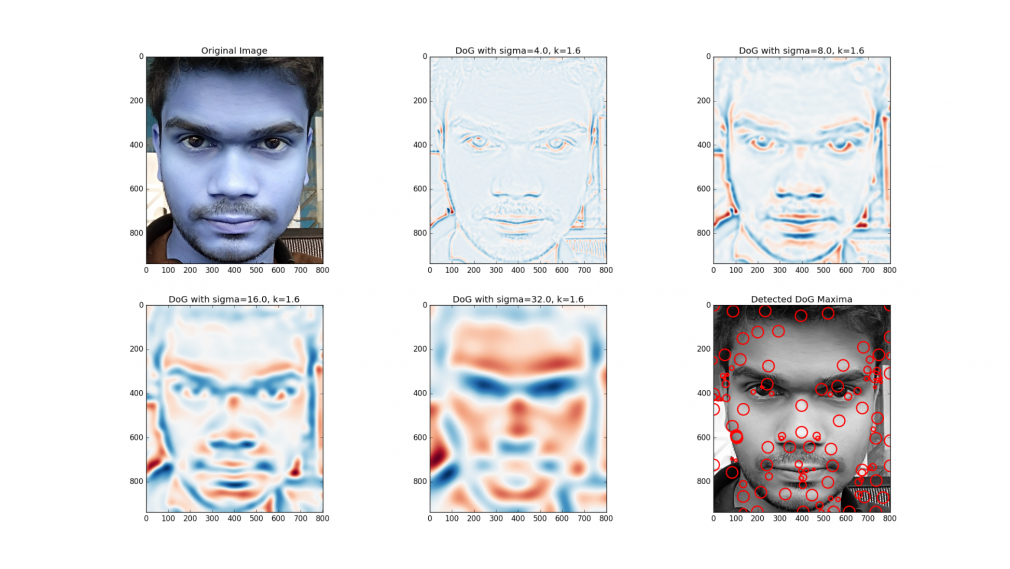
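A rough NumPy sketch of the quantization step follows. It assumes the usual four low frequencies (a, 0), (0, a), (a, a), (a, -a) with a = 1/M and keeps only the signs of the real and imaginary parts, giving an 8-bit code per pixel. The function name is hypothetical, and the decorrelation step used in the full method is deliberately left out.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def lpq_codes(image, M=3):
    """8-bit LPQ code for every pixel that has a full M-by-M neighbourhood."""
    image = np.asarray(image, dtype=float)
    a = 1.0 / M
    offsets = np.arange(M) - (M - 1) / 2.0          # neighbourhood offsets y
    w0 = np.ones(M, dtype=complex)                  # frequency 0 along one axis
    w1 = np.exp(-2j * np.pi * a * offsets)          # frequency a along one axis
    # Flip each window so that element [k, l] holds f(x - y), as in the STFT.
    windows = sliding_window_view(image, (M, M))[:, :, ::-1, ::-1]
    # Basis images indexed as [row offset, column offset].
    bases = [
        np.outer(w0, w1),           # u1 = (a, 0): horizontal frequency
        np.outer(w1, w0),           # u2 = (0, a): vertical frequency
        np.outer(w1, w1),           # u3 = (a, a)
        np.outer(np.conj(w1), w1),  # u4 = (a, -a)
    ]
    codes = np.zeros(windows.shape[:2], dtype=np.uint8)
    bit = 0
    for basis in bases:
        response = np.einsum("ijkl,kl->ij", windows, basis)
        for part in (response.real, response.imag):
            # Quantize: keep only the sign of each real and imaginary part.
            codes |= (part >= 0).astype(np.uint8) * np.uint8(1 << bit)
            bit += 1
    return codes

rng = np.random.default_rng(0)
face = rng.random((16, 16))
print(lpq_codes(face).shape)  # (14, 14)
```

As with LBP, the descriptor is typically the histogram of these codes over a region.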

Difference of Gaussians

Difference of Gaussians is a feature enhancement algorithm that subtracts one blurred version of an original image from another, less blurred version of the original. In the simple case of grayscale images, the blurred images are obtained by convolving the original grayscale image with Gaussian kernels having differing standard deviations. Blurring an image with a Gaussian kernel suppresses only high-frequency spatial information, so subtracting one blurred image from the other preserves the spatial information in the band of frequencies that survives the lighter blur but not the heavier one. Thus, the difference of Gaussians is a band-pass filter that discards all but a handful of the spatial frequencies present in the original grayscale image. Below are a few examples with varying sigma (standard deviation) of the Gaussian kernel, with detected blobs.
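For readers who want to experiment, the following is a minimal NumPy sketch of the subtraction. The helper names, the kernel truncated at three standard deviations, the reflect padding, and the sigma values are assumptions for illustration. The strongest response of the band-pass output marks the centre of a bright blob.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2.0 * sigma**2))
    return kernel / kernel.sum()

def gaussian_blur(image, sigma):
    """Separable Gaussian blur with reflect padding (output has the input shape)."""
    image = np.asarray(image, dtype=float)
    kernel = gaussian_kernel_1d(sigma)
    pad = len(kernel) // 2
    padded = np.pad(image, pad, mode="reflect")
    # Convolve every row, then every column, with the same 1-D kernel.
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def difference_of_gaussians(image, sigma_small, sigma_large):
    """Less blurred image minus more blurred image: a band-pass filter."""
    return gaussian_blur(image, sigma_small) - gaussian_blur(image, sigma_large)

# A single bright pixel on a dark background acts as a tiny blob.
image = np.zeros((33, 33))
image[16, 16] = 1.0
dog = difference_of_gaussians(image, sigma_small=1.0, sigma_large=2.0)
blob_center = np.unravel_index(np.argmax(dog), dog.shape)
print(blob_center)
```

Repeating this for several sigma pairs and keeping the maxima across scales is the usual way to turn the filter into a blob detector.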

Histogram of gradients

The technique counts occurrences of gradient orientation in localized portions of an image. The idea behind HOG is that local object appearance and shape within an image can be described by the distribution of intensity gradients or edge directions. The image is divided into small connected regions called cells, and for the pixels within each cell, a histogram of gradient directions is compiled. The descriptor is the concatenation of these histograms.

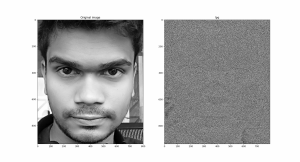
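The cell-histogram construction can be sketched as follows. This is a simplified illustration in NumPy: the function name, the 8x8 cells, the 9 unsigned orientation bins, and the magnitude weighting are assumed defaults, and the block normalization used by full HOG implementations is omitted.

```python
import numpy as np

def hog_descriptor(image, cell_size=8, n_bins=9):
    """Concatenated per-cell histograms of gradient orientation."""
    image = np.asarray(image, dtype=float)
    grad_y, grad_x = np.gradient(image)
    magnitude = np.hypot(grad_x, grad_y)
    # Unsigned orientation in degrees, folded into [0, 180).
    orientation = np.rad2deg(np.arctan2(grad_y, grad_x)) % 180.0
    n_rows = image.shape[0] // cell_size
    n_cols = image.shape[1] // cell_size
    histograms = []
    for r in range(n_rows):
        for c in range(n_cols):
            rows = slice(r * cell_size, (r + 1) * cell_size)
            cols = slice(c * cell_size, (c + 1) * cell_size)
            # Each pixel votes for its orientation bin, weighted by gradient magnitude.
            hist, _ = np.histogram(orientation[rows, cols], bins=n_bins,
                                   range=(0.0, 180.0), weights=magnitude[rows, cols])
            histograms.append(hist)
    return np.concatenate(histograms)

# A horizontal intensity ramp: every gradient points along x (orientation 0).
ramp = np.tile(np.arange(16.0), (16, 1))
descriptor = hog_descriptor(ramp)
print(descriptor.shape)  # (36,)
```

For the ramp, all of the gradient energy in every cell lands in the first orientation bin, which is an easy sanity check for the binning.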

FFT

The Fourier transform is used to analyze the frequency characteristics of various filters. For images, the 2D Discrete Fourier Transform (DFT) is used to find the frequency domain, and the fast Fourier transform (FFT) is the algorithm used to compute it efficiently. For a sinusoidal signal

$$x(t) = A \sin(2\pi f t),$$

we can say $f$ is the frequency of the signal, and if its frequency domain is taken, we see a spike at $f$. If the signal is sampled to form a discrete signal, we get the same frequency domain, but it is periodic in the range $[-\pi, \pi]$ or $[0, 2\pi]$ (or $[0, N]$ for an N-point DFT).

You can consider an image as a signal which is sampled in two directions. So taking Fourier transforms in both X and Y directions gives you the frequency representation of the image.
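As a quick illustration, the sketch below uses NumPy's FFT routines to compute the centred magnitude spectrum of a synthetic image. The image (a sinusoid with 8 cycles across 64 columns) and the helper name are assumptions for the example. The spectrum peaks on the centre row, 8 columns to either side of the zero frequency.

```python
import numpy as np

def magnitude_spectrum(image):
    """Magnitude of the 2-D DFT with the zero frequency shifted to the centre."""
    return np.abs(np.fft.fftshift(np.fft.fft2(image)))

n = 64
x = np.arange(n)
# Intensity varies only along x, with 8 full cycles across the image width.
image = np.tile(np.sin(2.0 * np.pi * 8 * x / n), (n, 1))

spectrum = magnitude_spectrum(image)
peak_row, peak_col = np.unravel_index(np.argmax(spectrum), spectrum.shape)
print(peak_row, abs(peak_col - n // 2))  # 32 8
```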

Blob features

These methods are aimed at detecting regions in a digital image that differ in properties, such as brightness or color, compared to surrounding regions. Informally, a blob is a region of an image in which some properties are constant or approximately constant; all the points in a blob can be considered in some sense to be similar to each other.

CenSurE features

CenSurE (Center Surround Extremas) is a scale-invariant center-surround feature detector that its authors claim outperforms other detectors while giving results in real time.

ORB features

ORB is a very fast binary descriptor based on BRIEF. Unlike plain BRIEF, it is rotation invariant, and it is resistant to noise.

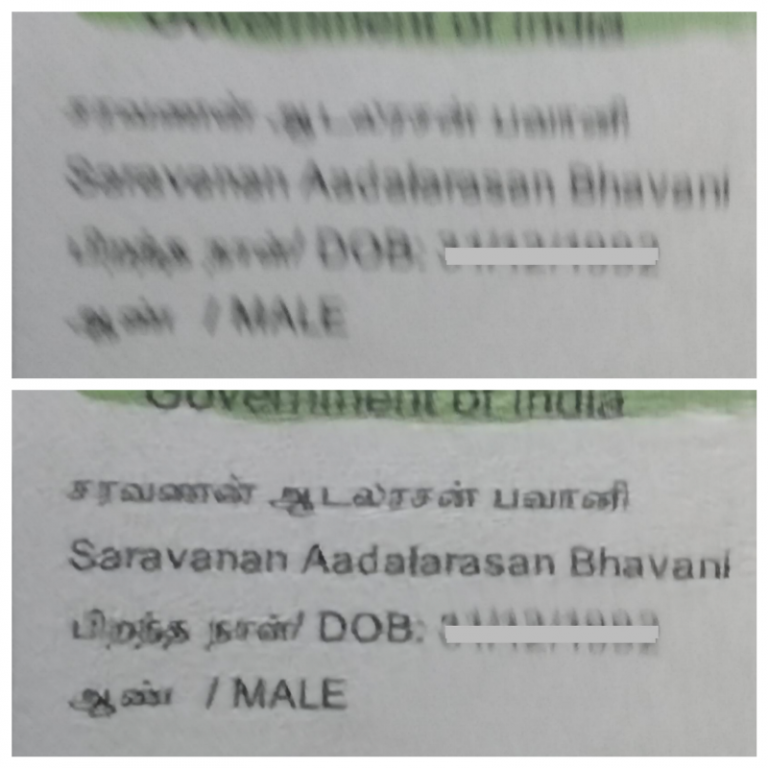

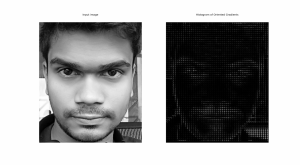

Dlib — 68 facial key points

This is one of the most widely used facial feature descriptors. The facial landmark detector included in the dlib library is an implementation of the paper One Millisecond Face Alignment with an Ensemble of Regression Trees by Kazemi and Sullivan (2014). The method starts with:

- A training set of labeled facial landmarks on images. These images are manually labeled, specifying the (x, y)-coordinates of regions surrounding each facial structure.

- Priors, or more specifically, the probability of the distance between pairs of input pixels.

Given this training data, an ensemble of regression trees is trained to estimate the facial landmark positions directly from the pixel intensities themselves (i.e., no “feature extraction” is taking place). The end result is a facial landmark detector that can be used to detect facial landmarks in real-time with high-quality predictions.

Code: https://www.pyimagesearch.com/2017/04/17/real-time-facial-landmark-detection-opencv-python-dlib/

Conclusion

Thus, in this blog we have compiled different facial features along with their code snippets. Different algorithms capture different facial features. Which descriptor performs best depends on the dataset at hand: its size, diversity, sparsity, and complexity all play a critical role in selecting the algorithm. These human-engineered features, when fed into convolutional networks, improve their accuracy.

Written By:

Signzy

Written by an insightful Signzian intent on learning and sharing knowledge.