You must have seen the term “deepfakes” in the headlines!

Crimes arising out of deepfakes have become a new trend!

As per research reports, there has been a surge of 230% in deepfake crimes since 2017.

Reason?

The easy accessibility of generative AI technologies.

Let’s first take a deep dive into what deepfakes are.

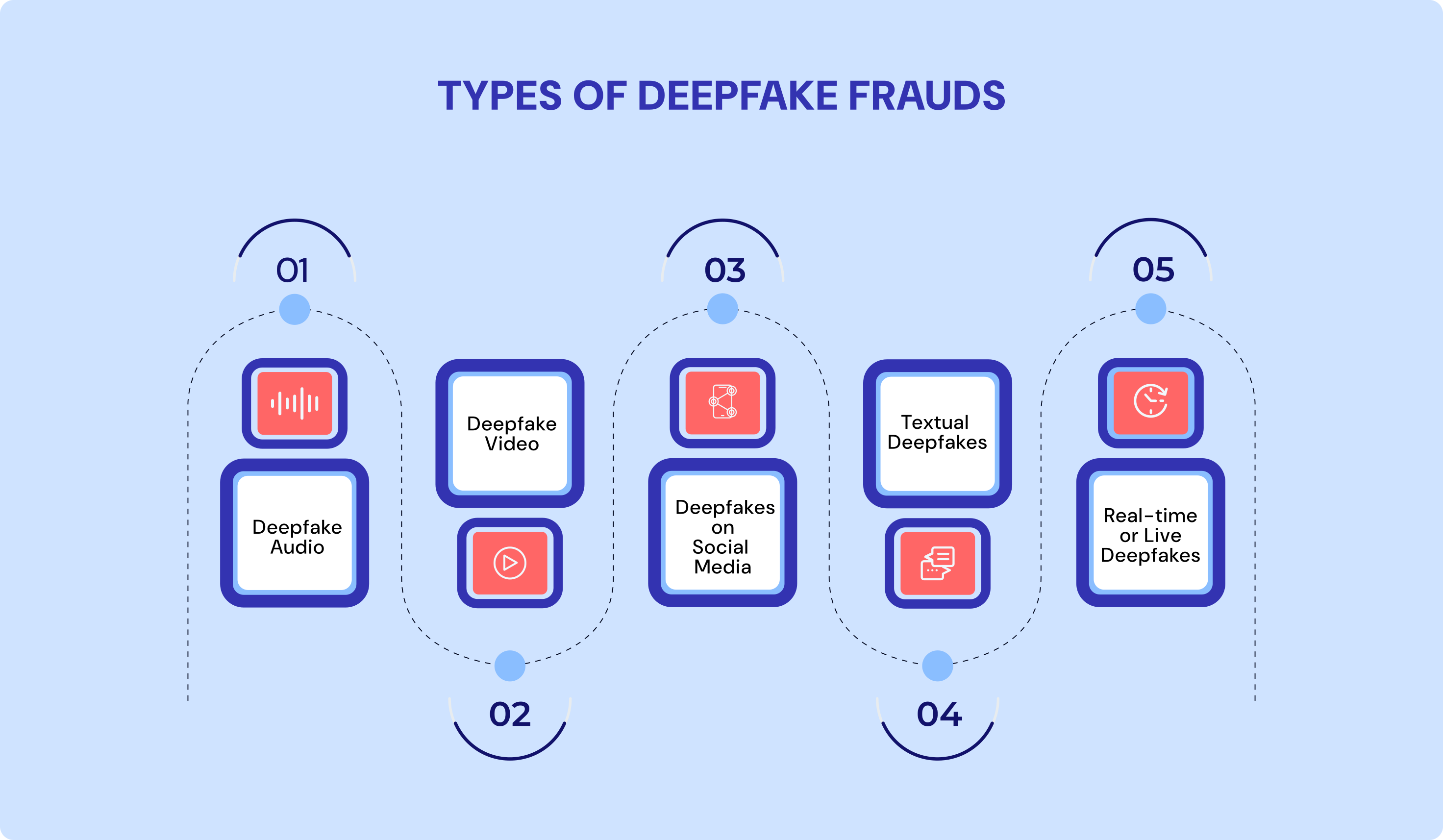

Deepfakes are videos or audio clips created using AI in which the face or voice of a certain person is edited to give the ‘unreal’ a real touch.

To give that touch, fraudsters need images/videos to base their deepfake on.

Using this data and these algorithms, the technology behind deepfakes learns how to imitate a person’s voice, facial expressions, motions, gestures, intonation, and vocabulary.

Photoshopping of photos/videos is not a new thing.

Then, what’s new about deepfakes?

Deepfakes use AI and machine learning tools to create or edit content so precisely that it becomes incredibly tough to differentiate from authentic content.

Many organizations and businesses wonder if this AI-generated content puts eKYC processes at risk and if such videos could be produced to forge personas in client engagement processes.

Such fraudulent attacks are on the rise due to the surge of AI-powered apps capable of creating deepfakes.

Unfortunately, not everyone can avoid falling into such traps.

In one incident in 2019, a staff member at a British energy company was scammed out of $250,000 (~ Rs 2.06 crore) by a deepfake voice impersonating the CEO of the group holding company.

Scamming people using deepfakes has now become a regular thing.

High-tech companies are responding to the rising crimes, albeit slowly. The issue is that deepfakes are difficult to detect: even visual forensics experts are sometimes unable to find anomalies and glitches in the forged content.

Deepfake creators have outpaced the technology that helps in identifying manipulations in the content.

Now the main question arises.

How can deepfakes harm organisations?

Firstly, they can damage an organisation’s reputation, which is a high-stakes risk for businesses.

Showcasing false interactions between an employee and client can potentially harm the organisation’s credibility, resulting in client loss and monetary damage. To protect organisations from such deepfake mishaps, close monitoring of online mentions and posts about the brand is very important.

Secondly, deepfake technology can be misused to spread false information about the organisation.

There are instances of deepfake fraud calls where the organisation is misrepresented to obtain sensitive information. People impersonating personnel from travel agencies, loan providers, customer helplines, etc., use voice replicas or deepfake video calls to pass themselves off as belonging to authentic organisations while in actuality fooling people.

How to prevent deepfake frauds – Biometric, data verification APIs?

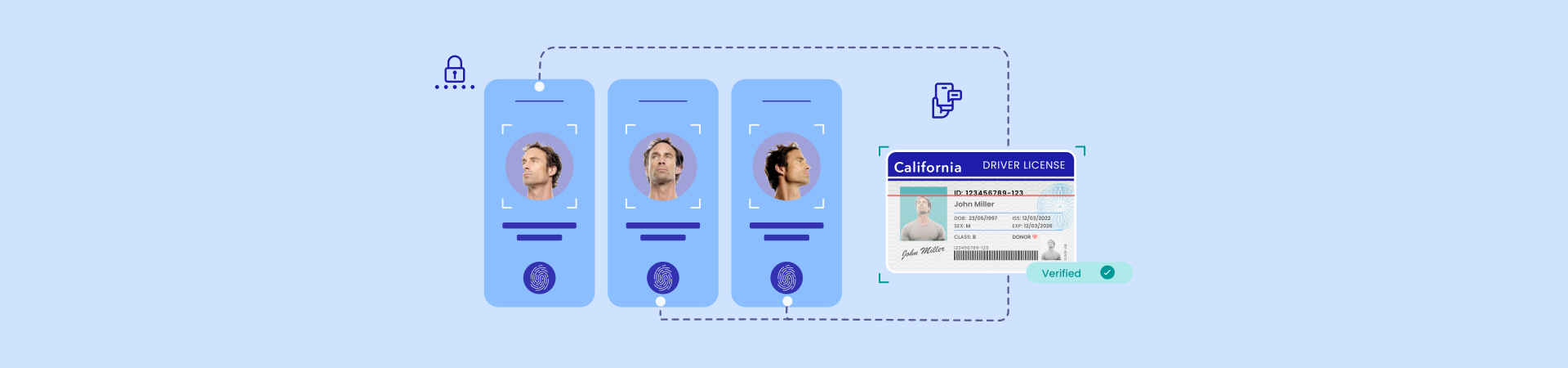

In many eKYC processes, individuals are asked to submit their ID and take a selfie. The selfie then goes through a face identification process and is matched against the photo shown on the ID. The data gathered and archived from successful and unsuccessful identification processes can be used to perform a match later.
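The selfie-to-ID match described above can be sketched roughly as follows. This is only an illustration, not Signzy's implementation: it assumes some face recognition model has already turned each photo into a numeric embedding, and the function names and the 0.8 threshold are hypothetical choices made for the example.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length numeric vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def faces_match(selfie_embedding, id_embedding, threshold=0.8):
    """Decide whether the selfie and the ID photo show the same person.

    The threshold is an assumed value; a real system would tune it
    against its own false-accept and false-reject rates.
    """
    return cosine_similarity(selfie_embedding, id_embedding) >= threshold
```

A higher threshold rejects more impostors but also turns away more genuine users, so the value is a business decision as much as a technical one.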

This process includes liveness detection, which typically prompts the individual to perform an action to prove that they are physically present. Motion prompts range from basic head turns to blinking or smiling.

Liveness detection makes sure that the biometrics obtained are from a living person hence restricting the use of static photos or videos. Furthermore, behavioral biometrics examine distinct trends in individual activity, for instance typing or swiping gestures, providing an additional layer of security.
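One way to picture an active liveness check is as a random challenge with a short expiry, so that a pre-recorded video cannot anticipate the prompts. The sketch below is a simplified assumption of how that could look: the action names, the 15-second window, and both function names are hypothetical, and the step that recognises actions in the video feed is left out entirely.

```python
import secrets
import time

# Hypothetical set of prompts; real products define their own.
ACTIONS = ("blink", "smile", "turn_head_left", "turn_head_right")


def issue_challenge(num_actions=3, ttl_seconds=15.0, now=None):
    """Create an unpredictable sequence of prompts with an expiry time."""
    now = time.time() if now is None else now
    actions = [secrets.choice(ACTIONS) for _ in range(num_actions)]
    return {"actions": actions, "expires_at": now + ttl_seconds}


def verify_challenge(challenge, observed_actions, now=None):
    """Pass only if the observed actions match, in order, before expiry."""
    now = time.time() if now is None else now
    if now > challenge["expires_at"]:
        return False
    return list(observed_actions) == challenge["actions"]
```

Because the sequence is drawn at random for every session, replaying an old recording is unlikely to satisfy the prompts in the right order within the time limit.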

Anti-spoofing tools are used to flag presentation attacks and determine whether biometric data corresponds to a real person or a fake identity. This can include techniques such as asking the individual to blink or smile on request.

Multi-factor authentication (MFA) may also be employed in the eKYC process to enhance security. It uses several authentication factors to ensure that an individual’s identity is genuine. The most popular method combines biometric confirmation, like face identification or a fingerprint scan, with possession factors like OTPs.
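As a rough illustration of combining the two factors, the sketch below pairs a face-match score with a time-based OTP generated as specified in RFC 4226 and RFC 6238. The `mfa_passes` function, the 0.8 face threshold, and the idea that the face score arrives as a number between 0 and 1 are assumptions made for this example.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using SHA-1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time step."""
    return hotp(secret, int(unix_time) // step, digits)


def mfa_passes(face_score, submitted_otp, secret, unix_time, face_threshold=0.8):
    """Require BOTH factors: a good face match and a correct OTP.

    face_threshold is an assumed value, not a recommended setting.
    """
    biometric_ok = face_score >= face_threshold
    otp_ok = hmac.compare_digest(submitted_otp, totp(secret, unix_time))
    return biometric_ok and otp_ok
```

The point of the design is that a convincing deepfake alone is not enough: the attacker would also need the victim's OTP device or secret.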

Video verification procedures for onboarding clients are supported by trained professionals and biometric processes that can verify the identity of individuals using advanced techniques and can determine whether the recording is real-time or system-generated.

In live video identification during a video call, it is much harder to fool the system with deepfakes, because automated and manual checks are designed to detect such spoofing attempts.

Good AI vs Bad AI – How can Signzy help your organisation detect fraudsters?

As said earlier, fraud tools are evolving in tandem with fraud detection techniques.

Fraud is inevitable in the AI world,

But that doesn’t mean that we cannot do anything about it,

we can adapt.

Since preventing fraud is always better than acting after it has occurred, it is advisable to implement a reliable tracking system that archives individual information for your organisation.

Why?

To review past fraud incidents and plan how to safeguard your organisation in the future.

Signzy can help you recognize whether you are dealing with a harmless jest or with the manipulation of someone’s persona. With the help of our automated KYC services, we will detect and prevent deepfakes from being used in your client onboarding process.

The technology behind deepfakes is expected to progress further in the future. Unfortunately, this will make it even easier for fraudsters to hide behind synthetic or stolen identities, even when KYC checks are performed using different forms of identification.

This highlights why a multifaceted anti-fraud approach is more necessary now than ever. The fraud detection team must be able to identify different types of suspicious signals that could indicate financial crime at a financial institution or marketplace.

By combining biometric verification, ML fraud detection, and frequent algorithm updates, Signzy services offer powerful safeguards against the risks arising from the advancement of deepfake technology backed by AI.

While developing AI technology to combat fraudulent activities, it is equally important to address ethical considerations. Ensuring that AI systems are transparent, reliable, and free from manipulations is crucial to winning public trust and loyalty and helping businesses boost conversion rates.

To counteract the increasing threats posed by AI-driven tech, organisations must take proactive action to protect their eKYC processes. By leveraging AI and deep learning algorithms, it is feasible to maintain the authenticity of digital identities and create a safer digital environment for all.

Committing to continuous improvements and safeguarding the KYC compliance of our customers is the purpose of Signzy’s dedicated eKYC developers.